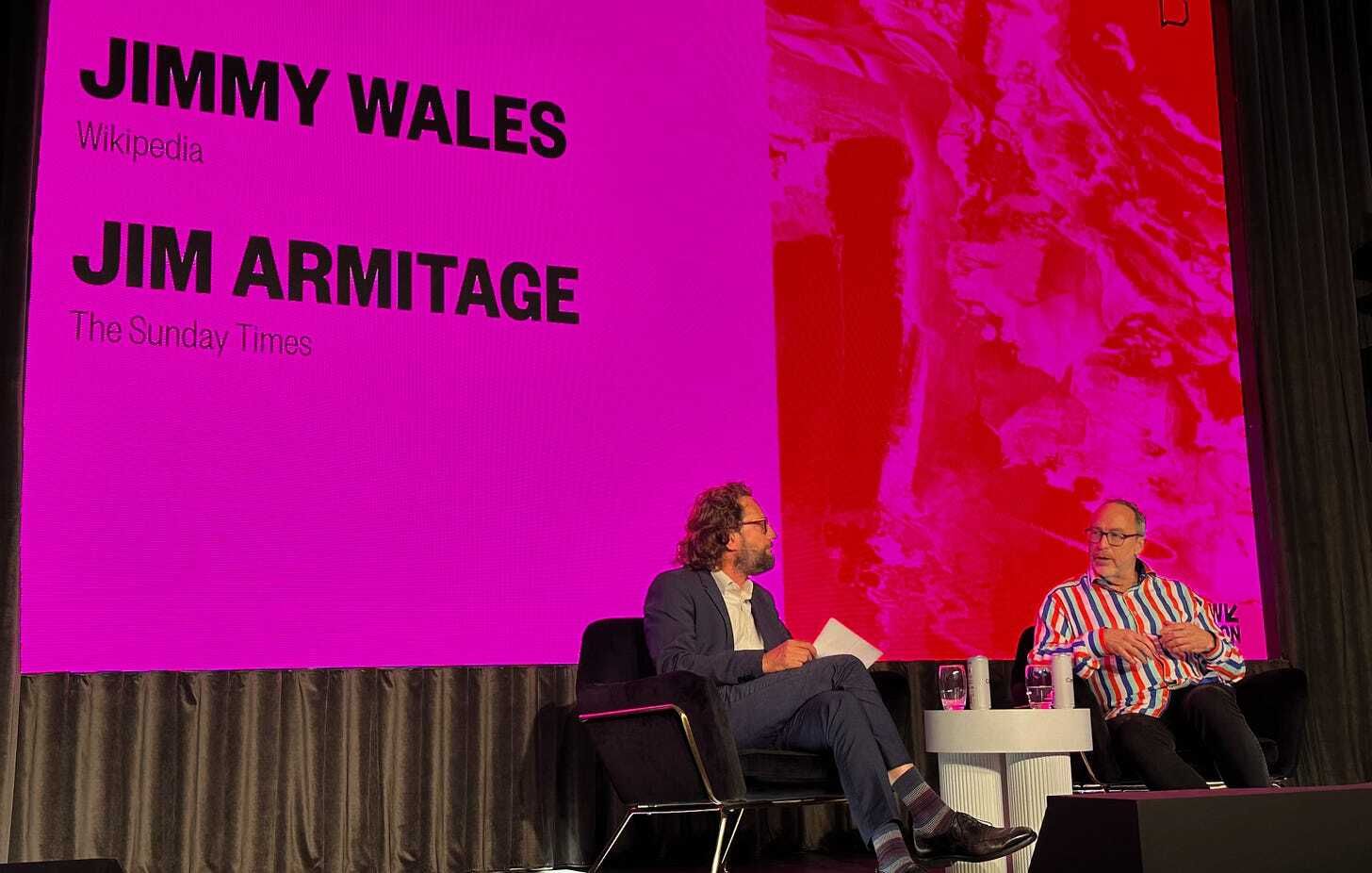

At the South by Southwest London conference last month, I had the pleasure of listening to Wikipedia founder Jimmy Wales in a fireside chat with Jim Armitage, contributing editor at The Sunday Times. This was the first time I had seen Wales live since I interviewed him in 2006 for the German daily newspaper Die Welt while he was visiting Bonn, Germany. My whole interview from then is no longer on the website, but here’s an excerpt (in German).

In 2006, Wales talked to me about cutting-edge topics of that era, such as swarm intelligence (he didn’t believe in it) and the Creative Commons licensing model, which was still quite new. On the SXSW stage in London, the major topic was AI. Wales revealed how Wikipedia is quietly testing AI tools to defend against fabricated sources while building better ways to verify neutrality and detect bias. Meanwhile, the Wikimedia Foundation (the non-profit organization that owns and operates Wikipedia and other Wikimedia projects) is launching an official strategy that doubles down on human editors.

Wikipedia is experimenting with artificial intelligence to strengthen the encyclopedia's core mission of neutrality and factual accuracy. Rather than using AI to generate content, Wales has built tools that analyze existing articles against their sources, flag potential bias, and help editors spot fabricated references that increasingly slip through traditional review processes. His approach reveals a pragmatic philosophy: use AI to support human judgment, never replace it.

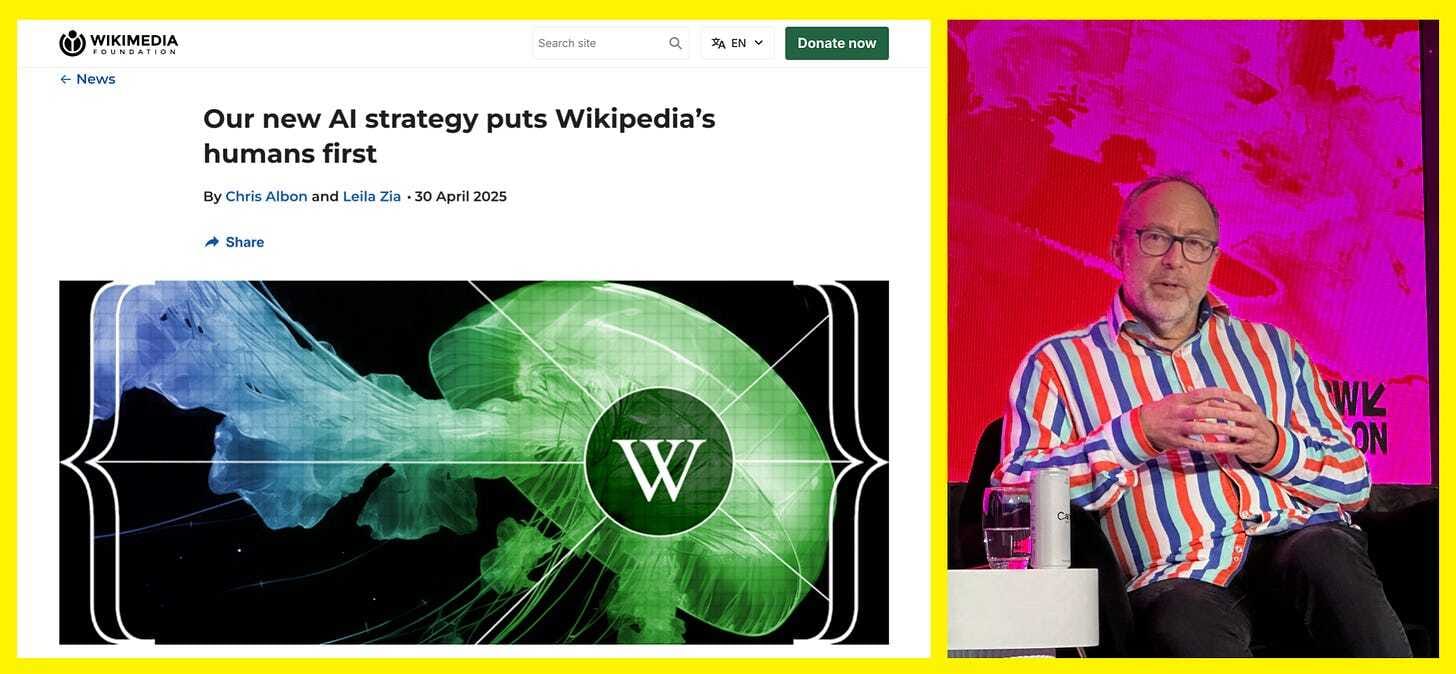

This personal experimentation aligns with the Wikimedia Foundation's official AI strategy, announced in April 2025, which prioritizes supporting human editors over automation. The strategy represents a coordinated response to mounting challenges from AI-generated misinformation that has begun infiltrating the platform.

Combatting Fake ISBNs and Other Fabricated Sources

Wikipedia is encountering a new category of misinformation: well-meaning editors unknowingly adding AI-generated fake sources. Wales shared a telling example from German Wikipedia, where a volunteer discovered multiple invalid ISBN book references from a single editor.

"The guy as it turned out was apparently good faith editing, and he was using ChatGPT," Wales explained at SXSW London. "He looked up some sources in ChatGPT, and just put them in the article. Of course, ChatGPT had made them up absolutely from scratch."

The fabrications are sophisticated enough to fool casual inspection. Wales described asking ChatGPT for cooking recipes with links to famous chefs' websites. Every link led to a 404 Not Found error. "But when I looked a little closer, they were all in the shape of 'Jamie Oliver/menu/recipe/whatever'. The URLs looked real, even down to that level of detail, even though they were absolutely fictional."

This problem extends beyond individual editors making mistakes. According to the Wikimedia Foundation's April 2025 strategy document, "thousands of hard-to-detect Wikipedia hoaxes and other forms of misinformation and disinformation can be produced in minutes," requiring new approaches to content verification.

The challenge got worse during the last presidential election cycle, and it is fundamentally different from the "fake news" sites that appeared during previous election cycles. Those sites could be spotted by Wikipedia's experienced editors because "looking plausible isn't enough to trick people who spend their lives literally obsessively debating quality of sources," Wales noted. AI-generated sources, however, pass initial credibility tests while being entirely fictional.
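Some fabricated references can still be caught mechanically. ISBNs carry a check digit, so a number invented from scratch often fails the checksum. The following is a minimal sketch of that test, not a description of any tool Wikipedia editors actually use; the function names are made up for illustration. A passing checksum only shows that the number is well-formed, not that the book exists, so a human still has to look the title up.

```python
def _clean(isbn: str) -> str:
    """Drop hyphens and spaces and uppercase a trailing 'x'."""
    return isbn.replace("-", "").replace(" ", "").upper()


def is_valid_isbn10(isbn: str) -> bool:
    """ISBN-10: weights 10..1, weighted sum must be divisible by 11."""
    s = _clean(isbn)
    if len(s) != 10 or not s.isascii():
        return False
    if not s[:9].isdigit() or not (s[9].isdigit() or s[9] == "X"):
        return False
    # 'X' is only allowed as the check digit and stands for the value 10.
    values = [int(c) for c in s[:9]] + [10 if s[9] == "X" else int(s[9])]
    total = sum(weight * value for weight, value in zip(range(10, 0, -1), values))
    return total % 11 == 0


def is_valid_isbn13(isbn: str) -> bool:
    """ISBN-13: weights alternate 1, 3; weighted sum must be divisible by 10."""
    s = _clean(isbn)
    if len(s) != 13 or not s.isascii() or not s.isdigit():
        return False
    total = sum(int(c) * (1 if i % 2 == 0 else 3) for i, c in enumerate(s))
    return total % 10 == 0


def is_valid_isbn(isbn: str) -> bool:
    """Accept either format; reject anything that fails both checksums."""
    return is_valid_isbn10(isbn) or is_valid_isbn13(isbn)
```

A reviewer could run `is_valid_isbn` over every ISBN in an article's citations and inspect only the failures by hand first.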

Wales's Experiments With AI Verification Tools

Wales has developed his own AI-powered analysis system that demonstrates Wikipedia's approach to AI augmentation. His tool takes a Wikipedia article, extracts all of its sources, and asks two key questions: "Is there anything that isn't in the article that could be based on these sources, and is there anything in the article that's not supported by the sources?"

The system shows early promise for bias detection as well. "Feed it an article and all the sources and say, 'Is this article biased on anything compared to the sources?'" Wales explained at SXSW London. "It won't catch everything, and it'll be wrong sometimes." But the tool seems to be good enough to assist human editors in a meaningful way.
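Wales did not describe how his tool is built, so the following is only a guess at the general shape of such a workflow: combine the article and its sources into one prompt that ends with fixed questions. The questions are adapted from his quotes; the function name, the prompt layout, and the assumption that the source texts have already been fetched are all hypothetical. The sketch stops at building the prompt and does not call any model.

```python
# Questions adapted from Wales's description; the wording is split up for clarity.
QUESTIONS = (
    "Is there anything that isn't in the article that could be based on these sources?",
    "Is there anything in the article that's not supported by the sources?",
    "Is this article biased on anything compared to the sources?",
)


def build_verification_prompt(article: str, sources: list[str]) -> str:
    """Assemble one plain-text prompt from an article and its source texts."""
    parts = ["ARTICLE:", article.strip(), ""]
    for number, source in enumerate(sources, start=1):
        parts += [f"SOURCE {number}:", source.strip(), ""]
    parts.append("QUESTIONS:")
    parts += [f"{number}. {question}" for number, question in enumerate(QUESTIONS, start=1)]
    return "\n".join(parts)
```

Whatever a model returns for such a prompt would be a list of leads for a human editor to check, not text to paste into the article.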

However, the human-in-the-loop principle remains critical. "You wouldn't let it edit Wikipedia, because, my God, it makes stuff up left and right," Wales said. This reflects Wikipedia's core philosophy that AI should flag potential issues for human review, not make editorial decisions.

As reported by TechCrunch in April 2025, Wikipedia "intends to use AI as a tool that makes people's jobs easier, not replace them." The organization explicitly rejected the idea of replacing human editors with AI, instead focusing on "specific areas where generative AI excels" to support human decision-making.

Official Strategy: Enable Human Accomplishments

The Wikimedia Foundation's AI strategy formalizes this human-centered approach. Chris Albon, Director of Machine Learning at Wikimedia Foundation, and Leila Zia, Head of Research, wrote in April 2025: "We will use AI to build features that remove technical barriers to allow the humans at the core of Wikipedia to spend their valuable time on what they want to accomplish, and not on how to technically achieve it."

The strategy identifies four key areas for AI investment:

Supporting moderators and patrollers with AI-assisted workflows that automate tedious tasks while maintaining quality control. As the strategy document notes, the priority is "using AI to support knowledge integrity and increase the moderator's capacity to maintain Wikipedia's quality."

Improving information discoverability to give editors more time for human deliberation and consensus building, rather than searching for sources and background information.

Automating translation and adaptation of common topics to help editors share local perspectives, which is particularly important for less-represented languages.

Scaling volunteer onboarding with guided mentorship to bring new editors into the community more effectively.

The strategy emphasizes open-source approaches and transparency, reflecting Wikipedia's broader values. It also includes annual review cycles, acknowledging the rapid pace of AI development.

Community-Driven Defense: WikiProject AI Cleanup

While the Foundation develops official strategies, volunteer editors have already mobilized. WikiProject AI Cleanup, which was formed in 2023 but gained significant momentum in 2024, represents a grassroots effort to combat AI-generated content.

"A few of us had noticed the prevalence of unnatural writing that showed clear signs of being AI-generated, and we managed to replicate similar 'styles' using ChatGPT," Ilyas Lebleu, a founding member of WikiProject AI Cleanup, told 404 Media in November 2024. "Discovering some common AI catchphrases allowed us to quickly spot some of the most egregious examples of generated articles."

The project has developed systematic approaches to identifying AI content, including a detailed list of "AI catchphrases" - language patterns that reveal automated generation. Common indicators include phrases like "serves as a testament," "plays a vital role," "continues to captivate," and obvious AI responses like "as an AI language model" or "as of my last knowledge update."

One example the group identified was a Wikipedia article about the Chester Mental Health Center that included the phrase "As of my last knowledge update in January 2022" - a clear indication of ChatGPT-generated content that hadn't been properly edited.
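Spotting such phrases is easy to approximate in code. The sketch below uses only the phrases quoted in this article, not the project's full list, and the function name is invented for illustration. A hit is a reason for a human to take a closer look, not proof of AI authorship, since several of these phrases also occur in ordinary human writing.

```python
import re

# Phrases quoted in this article; the project's own list is longer.
CATCHPHRASES = [
    "serves as a testament",
    "plays a vital role",
    "continues to captivate",
    "as an ai language model",
    "as of my last knowledge update",
]


def find_catchphrases(text: str) -> list[str]:
    """Return the listed phrases that occur in text, ignoring case and line breaks."""
    normalized = re.sub(r"\s+", " ", text).lower()
    return [phrase for phrase in CATCHPHRASES if phrase in normalized]
```

For instance, `find_catchphrases("As of my last knowledge update in January 2022, ...")` returns the last phrase in the list, which is the pattern that gave away the article mentioned above.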

The project's goal, according to its Wikipedia page, is "not to restrict or ban the use of AI in articles, but to verify that its output is acceptable and constructive, and to fix or remove it otherwise."

Wikipedia's editing community is proving naturally resistant to AI deception, but vigilance is increasing. "Our community has become more vigilant about the sources," Wales noted at SXSW London. "Just because there's a link, doesn’t make it real. You’ve got to click that link and check, does a page even exist?"
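The "does a page even exist?" check can be partly automated. The sketch below uses only Python's standard library; the function names and the User-Agent string are made up for illustration, and nothing here reflects how Wikipedia's own tooling works. It sends a HEAD request and treats anything outside the 200 to 399 range as suspect. Some servers reject HEAD requests, so a flagged link still needs a human click before it is removed.

```python
import urllib.error
import urllib.request
from typing import Callable, Iterable


def http_status(url: str, timeout: float = 10.0) -> int:
    """Return the HTTP status for a HEAD request, or 0 if the request fails."""
    try:
        request = urllib.request.Request(
            url, method="HEAD", headers={"User-Agent": "link-check-sketch/0.1"}
        )
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as err:
        # 4xx and 5xx responses arrive as exceptions; keep the status code.
        return err.code
    except (OSError, ValueError):
        # DNS failures, timeouts, refused connections, malformed URLs.
        return 0


def find_dead_links(
    urls: Iterable[str], get_status: Callable[[str], int] = http_status
) -> list[str]:
    """Return the URLs whose status is not in the 200-399 range."""
    return [url for url in urls if not 200 <= get_status(url) < 400]
```

Passing `get_status` as a parameter keeps the filtering logic testable without network access, for example by handing in a dictionary lookup of canned status codes.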

The platform's radical transparency helps. When experienced editors discover fabricated sources, they teach newcomers that they cannot simply trust ChatGPT.

This community-driven and ultimately human-driven verification process contrasts sharply with the algorithmic content moderation of social media platforms. As Wales put it at SXSW London: "Wikipedia doesn't have a box that says, 'What's on your mind?' Because it turns out a lot of people have pretty horrible shit on their mind."

The Difference Between Wikipedia and Social Media

Wales's approach to AI reflects a broader philosophy about technology's role in maintaining information quality. Unlike social media platforms struggling with content moderation at scale, Wikipedia's structure naturally limits AI's potential for harm.

"We have the encyclopedia article and the talk page. And we try to present all the views in a fair way. We're going to try to be thoughtful and kind to each other,'" Wales explained at SXSW London. "That is a much easier problem to moderate."

Wales praised X/Twitter's Community Notes feature as "pretty good" and "scalable" because it relies on "trusted people in the community." But he criticized Meta's decision to abandon its fact-checking programs, noting that while Meta's fact-checking "wasn't really working," the alternative of "if it's not illegal, we're not going to take it down" isn't the right answer either.

Broader Implications for Information Integrity

Wales's Wikipedia experiments offer lessons for other platforms grappling with AI-generated misinformation. The key insight: technical solutions alone are insufficient. Community standards, transparent editing processes, and human oversight remain essential.

"I think people have a real passion for facts and for neutrality," Wales argued at SXSW London. "Even if we're going through a period when that concept is under attack. We'll just carry on."

The Wikipedia model suggests that defending information integrity in the AI era requires:

Standards-first development: Establishing editorial guidelines before implementing technology, as the Wikimedia Foundation did with its strategy process

Community involvement: Leveraging experienced editors who understand source quality, as demonstrated by WikiProject AI Cleanup's success

Transparency: Making all edits visible and traceable, allowing rapid identification of problematic content

Human oversight: Using AI to flag issues, not make decisions, as Wales's personal tools demonstrate

Collaborative verification: Multiple eyes checking sources and claims, supported but not replaced by AI tools

What's Next

The Wikimedia Foundation plans to implement its AI strategy over three years, with annual reviews to adapt to rapid technological changes. Given its strategic priorities, the Foundation faces a fundamental resource allocation decision: whether to use AI for content generation or for content integrity.

The Foundation has chosen to prioritize content integrity. Its strategy document reasons that "new encyclopedic knowledge can only be added at a rate that editors can handle," so investing more in generation would overwhelm editors' capacity.

Wales continues refining his AI verification tools while remaining cautious about broader implementation. The goal isn't to automate Wikipedia's editorial process but to give editors better tools for maintaining accuracy and neutrality.

"We want kind and thoughtful people. I don't really care about your politics. I care that you're thoughtful, you're willing to grapple with arguments, you're willing to work collaboratively with others," Wales said at SXSW London. Wikipedia's AI future will be human-centered - enhancing rather than replacing the collaborative editing that has made it successful.

As fabricated sources become more sophisticated, Wikipedia's combination of technical tools and community vigilance may prove essential for maintaining information integrity across the broader internet. The platform's approach - using AI to support human judgment rather than replace it - offers a blueprint for other organizations grappling with the challenge of maintaining trust in an age of artificial intelligence.